San Francisco City Attorney Sues Sites That "Undress" Women With AI

https://cointelegraph.com/news/san-francisco-attorney-sues-ai-sites-undress-women

San Francisco’s City Attorney has filed a lawsuit against the owners of 16 websites that have allowed users to “nudify” women and young girls using AI.

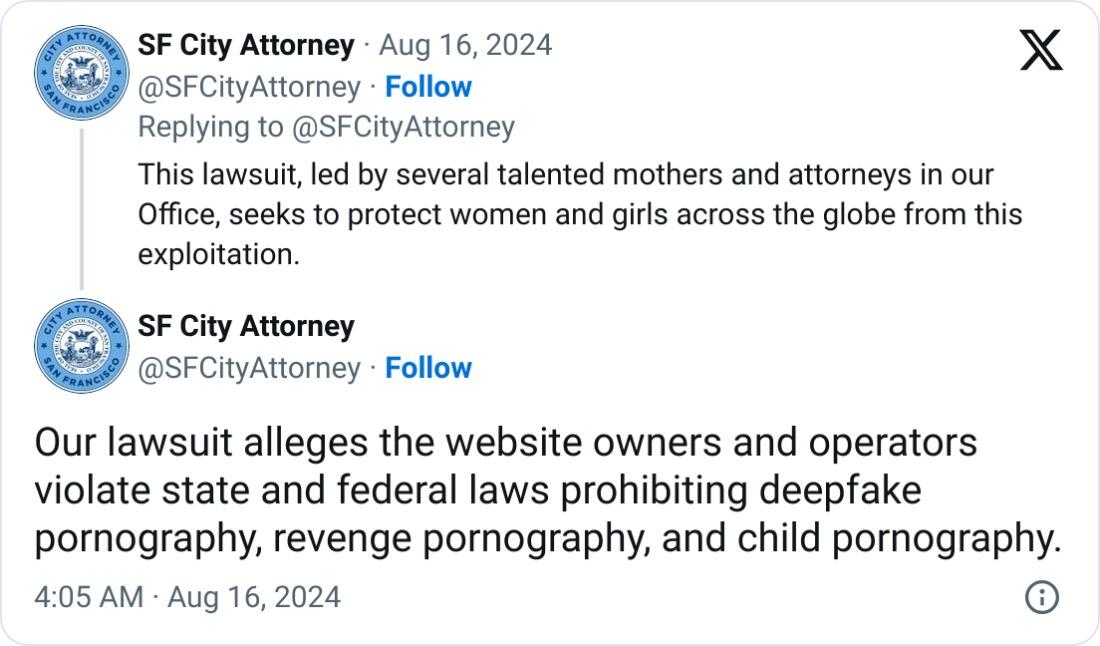

The office of San Francisco City Attorney David Chiu said on Aug. 15 (https://www.sfcityattorney.org/2024/08/15/city-attorney-sues-most-visited-websites-that-create-nonconsensual-deepfake-pornography/#:~:text=SAN%20FRANCISCO%20(August%2015%2C%202024,distribute%20nonconsensual%20AI%2Dgenerated%20pornography) that he was suing the owners of 16 of the “most-visited websites” that allow users to “undress” people in a photo to make “nonconsensual nude images of women and girls.”

A redacted version of the suit (https://www.sfcityattorney.org/wp-content/uploads/2024/08/Complaint_Final_Redacted.pdf) […] and Estonia who have violated California and United States laws on deepfake porn, revenge porn and child sexual abuse material.

The websites are far from unknown, either. The complaint claims that they have racked up […] in just the first half of the year.

One website boasted that it allows its users to “see anyone naked.” Another says, “Imagine wasting time taking her out on dates when you can just use [the website] to get her nudes,” according to the complaint.

Source: https://x.com/SFCityAttorney/status/1824145474355597783

The AI models used by the sites are trained on images of porn and child sexual abuse material, Chiu’s office said.

Essentially, someone can upload a picture of their target to generate a realistic, pornographic version of them. Some sites limit their generations to adults only, but others even allow images of children to be created.

Chiu’s office said the images are “virtually indistinguishable” from the real thing and have been used to “extort, bully, threaten, and humiliate women and girls,” many of whom have little to no control over the fake images once they’ve been created.

In February, AI-generated nude images of 16 eighth-grade students (who are typically 13 to 14 years old) were shared by students at a California middle school, Chiu’s office said.

In June, ABC News reported (https://www.abc.net.au/news/2024-06-11/bacchus-marsh-grammar-explicit-images-ai-nude/103965298) that Victoria Police had arrested a teenager for allegedly circulating 50 images of grade nine to 12 students who attended a school outside Melbourne, Australia.

“This investigation has taken us to the darkest corners of the internet, and I am absolutely horrified for the women and girls who have had to endure this exploitation,” said Chiu.

“We all need to do our part to crack down on bad actors using AI to exploit and abuse real people, including children,” he added.

Chiu said that AI has “enormous promise,” but there are criminals that are exploiting the technology, adding, “We have to be very clear that this is not innovation — this is sexual abuse.”

https://cms.zerohedge.com/users/tyler-durden

Fri, 08/16/2024 - 15:40

https://www.zerohedge.com/technology/san-francisco-city-attorney-sues-sites-undress-women-ai